Screaming Frog is a well known and powerful tool for SEOs, it allows them to crawl and analyse websites deeply but using their some what old school user interface and managing the extensive data it generates can be underwhelming. So, here is a way to automate the process of organising issue reports in Google Sheets and creating hyperlinks for easy navigation, you can streamline your workflows and save significant time, and just spend time doing the fun stuff… analysing the data inline with Google Search Console indexing report and putting best practice suggestions together for you, your client, or engineers.

Table of Contents

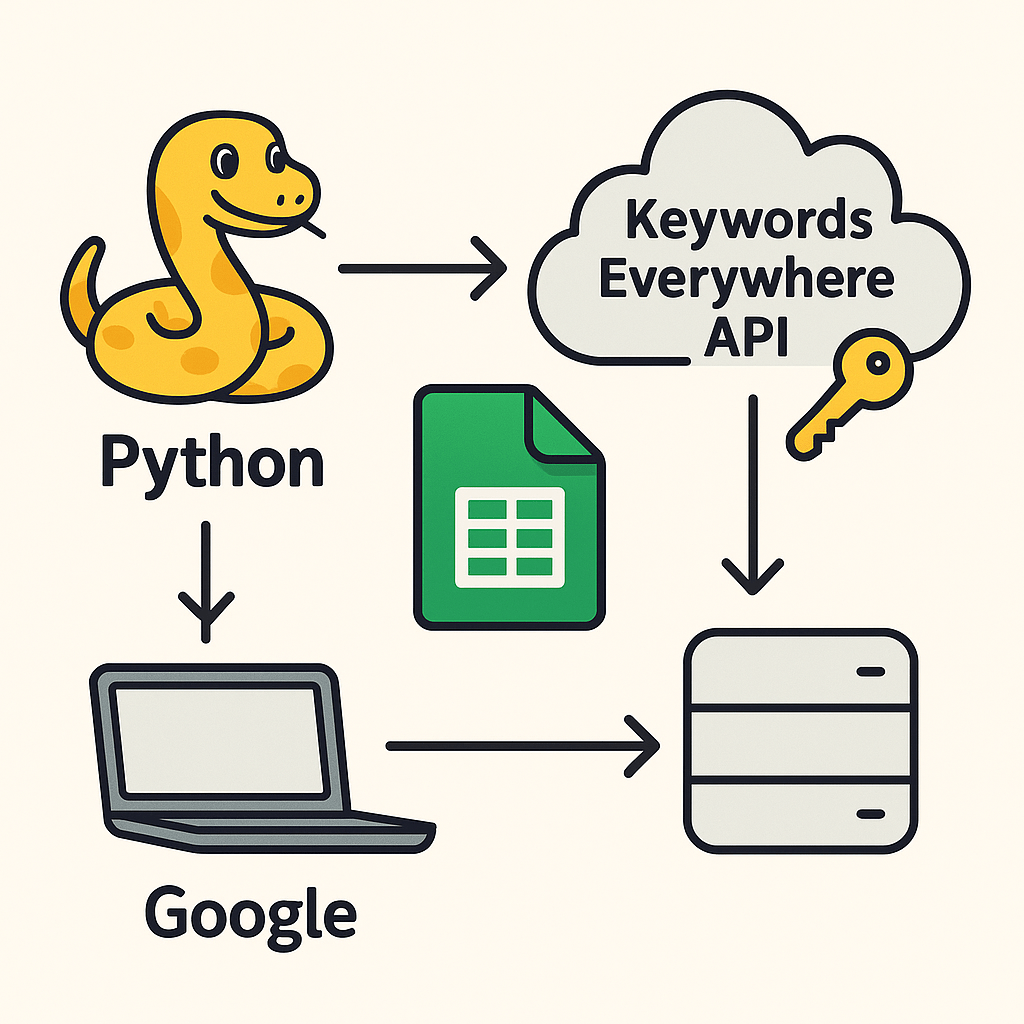

This guide walks you through a Python-based solution to:

- Organise Screaming Frog issues data in Google Sheets.

- Link each issue in the Summary tab to its respective issue tab.

- Make data exploration fast, efficient, and user-friendly.

Screaming Frog have published a relevant article teching you ‘How To Automate Crawl Reports In Looker Studio‘, I suggest checking that out if you want to automate data it into a reporting dashboard.

Step 1: Organise Screaming Frog Issue Data in Google Sheets

The first step is to process the Issues Overview Report exported from Screaming Frog. This report contains a high-level summary of all issues found on the website, including issue names, descriptions, priority levels, and affected URLs. Our Python script will:

- Create a Summary tab in Google Sheets containing all rows and columns from the Issues Overview Report.

- Create individual tabs for each unique issue, with only the rows relevant to that specific issue.

Step 2: Populate Tabs with Issue-Specific Data

Screaming Frog allows you to export detailed CSV files for each issue. For instance:

- H1 Duplicate has a CSV file listing all URLs with duplicate H1 tags.

- Images Missing Alt Text has a CSV file with URLs for images missing

altattributes.

The script processes these CSV files, matches them to their respective tabs in the Google Sheet, and pastes the data starting from row 4 to leave space for issues’s overview information.

Step 3: Add Hyperlinks in the Summary Tab

To make navigation easier, we add hyperlinks in the “Issue Name” column of the Summary tab. Each hyperlink points to the corresponding tab for that issue, enabling quick access with a single click.

For example:

- Clicking on “H1 Duplicate” in the Summary tab will take you to the H1 Duplicate tab, where all URLs for this issue are listed.

How It Works

1. Process the Issues Overview Report

The script first reads the issues_overview_report.csv file and processes it to:

- Create a

Summarytab containing all columns and rows. - Create tabs for each unique issue name, filtered to include only rows related to that issue.

2. Match Issue-Specific CSV Files

Next, the script reads all issue-specific CSV files from a folder, matches them to the respective tabs using fuzzy matching, and pastes the data in the respective issues tab.

3. Add Hyperlinks to the Summary Tab

Finally, the script scans the “Issue Name” column in the Summary tab and adds hyperlinks that point to the corresponding issue tabs. This makes it easy to navigate between the high-level overview and the detailed data for each issue.

The Python Scripts

Here are the scripts that automate these tasks.

Script 1: Process Screaming Frog Data

import os

import pandas as pd

import gspread

from fuzzywuzzy import process

from oauth2client.service_account import ServiceAccountCredentials

import time

def connect_to_google_sheets(sheet_name):

scope = [

"https://spreadsheets.google.com/feeds",

"https://www.googleapis.com/auth/drive"

]

creds = ServiceAccountCredentials.from_json_keyfile_name("{REPLACE}-credentials.json", scope)

client = gspread.authorize(creds)

# Connect to an existing Google Sheet by name or create it if it doesn't exist

try:

sheet = client.open(sheet_name)

print(f"Connected to existing Google Sheet: {sheet_name}")

except gspread.exceptions.SpreadsheetNotFound:

sheet = client.create(sheet_name)

print(f"Created new Google Sheet: {sheet_name}")

# Share the Google Sheet with an email address

sheet.share('You@YourEmailAddress.com', perm_type='user', role='writer')

sheet.share('YouColleague@TheirEmailAddress.com', perm_type='user', role='writer')

return sheet

def clean_name(name):

"""Cleans and normalizes a name for matching."""

return name.strip().lower().replace("_", " ").replace("-", " ").replace(":", "").replace(",", "").replace(".csv", "")

def write_to_sheet(sheet, tab_name, df, retries=3, start_row=1):

df = df.fillna("") # Replace NaN values with empty strings

try:

# Check if the worksheet already exists

worksheet = sheet.worksheet(tab_name)

print(f"Worksheet '{tab_name}' already exists. Updating its contents.")

except gspread.exceptions.WorksheetNotFound:

# Create the worksheet if it doesn't exist

for attempt in range(retries):

try:

worksheet = sheet.add_worksheet(title=tab_name, rows="1000", cols="26")

print(f"Created new worksheet '{tab_name}'.")

break

except gspread.exceptions.APIError as e:

if attempt < retries - 1:

print(f"API error on attempt {attempt + 1}: {e}. Retrying...")

time.sleep(5) # Wait before retrying

else:

print(f"Failed to create worksheet '{tab_name}' after {retries} attempts.")

return

# Write data in a single operation (clear + update in one go)

try:

worksheet.resize(rows=len(df) + start_row - 1, cols=len(df.columns))

values = [df.columns.values.tolist()] + df.values.tolist()

worksheet.update(f"A{start_row}", values)

except gspread.exceptions.APIError as e:

print(f"Failed to update worksheet '{tab_name}': {e}")

time.sleep(2) # Add delay to avoid hitting API rate limits

def process_overview_report(issues_overview_path, sheet_name):

# Load the Issues Overview Report

issues_overview_df = pd.read_csv(issues_overview_path)

# Connect to the existing Google Sheet

sheet = connect_to_google_sheets(sheet_name)

# Step 1: Write the Summary tab

write_to_sheet(sheet, "Summary", issues_overview_df, start_row=1)

print("Summary tab updated.")

# Step 2: Create a tab for each unique issue

for issue_name in issues_overview_df["Issue Name"].unique():

# Filter rows for the specific issue

issue_df = issues_overview_df[issues_overview_df["Issue Name"] == issue_name]

# Write the filtered rows to a tab

write_to_sheet(sheet, issue_name[:50], issue_df, start_row=1)

print(f"Created tab for issue: {issue_name}")

def process_issue_csvs(folder_path, sheet_name):

# Connect to the existing Google Sheet

sheet = connect_to_google_sheets(sheet_name)

# Cache tab names to avoid repeated API calls

tab_cache = [worksheet.title for worksheet in sheet.worksheets()]

# Iterate through each CSV file in the folder

for file_name in os.listdir(folder_path):

if file_name.endswith(".csv"):

# Clean the file name for matching

issue_name = file_name.replace(".csv", "").replace("_", " ").capitalize()

# Find an exact match in the tab names

matched_tab_name = process.extractOne(issue_name, tab_cache, score_cutoff=70)

if not matched_tab_name:

print(f"Warning: No matching tab found for issue '{file_name}'. Skipping...")

continue

matched_tab_name = matched_tab_name[0] # Extract the matched tab name

# Load the CSV file

file_path = os.path.join(folder_path, file_name)

issue_df = pd.read_csv(file_path)

# Append data from the CSV file to the matched tab starting at row 4

write_to_sheet(sheet, matched_tab_name, issue_df, start_row=4)

print(f"Processed and updated tab for issue: {matched_tab_name}")

print(f"Google Sheet '{sheet_name}' updated with all issues.")

if __name__ == "__main__":

# File path to the Screaming Frog Issues Overview Report (step 1 file)

issues_overview_path = "issues_overview_report.csv"

# Folder path to the issue-specific CSV files (step 2 files)

issues_folder_path = "/{full-folder-path}/issues_reports"

# Name of the Google Sheet (same for both steps)

google_sheet_name = "Name Your Sheet"

# Step 1: Process the Issues Overview Report

process_overview_report(issues_overview_path, google_sheet_name)

# Step 2: Process the issue-specific CSV files

process_issue_csvs(issues_folder_path, google_sheet_name)

This script connects you to GoogleSheets through the Google Sheets API, you’ll need to create your own credentials.json file and store this in the same folder that the script is run from, and then it processes the Issues Overview Report and the issue-specific CSV files from Screaming Frog, matching them to their respective tabs.

Head on over to https://medium.com/@a.marenkov/how-to-get-credentials-for-google-sheets-456b7e88c430#:~:text=Press%20’CREATE%20CREDENTIALS’%20and%20select,to%20the%20list%20of%20credentials. to find out how to create your own credentials.json file.

Script 2: Add Hyperlinks to the Summary Tab

import gspread

from oauth2client.service_account import ServiceAccountCredentials

import time

def connect_to_google_sheets(sheet_name):

scope = [

"https://spreadsheets.google.com/feeds",

"https://www.googleapis.com/auth/drive"

]

creds = ServiceAccountCredentials.from_json_keyfile_name("credentials.json", scope)

client = gspread.authorize(creds)

# Connect to an existing Google Sheet by name

try:

sheet = client.open(sheet_name)

print(f"Connected to existing Google Sheet: {sheet_name}")

return sheet

except gspread.exceptions.SpreadsheetNotFound:

print(f"Google Sheet '{sheet_name}' not found.")

return None

def fetch_tab_gid(sheet):

"""Fetches the mapping of tab names to their gids."""

tabs = {}

metadata = sheet.fetch_sheet_metadata()

for sheet_data in metadata["sheets"]:

tab_name = sheet_data["properties"]["title"]

gid = sheet_data["properties"]["sheetId"]

tabs[tab_name] = gid

return tabs

def add_hyperlinks_to_summary(sheet, summary_tab_name="Summary"):

try:

# Get the Summary tab

worksheet = sheet.worksheet(summary_tab_name)

print(f"Found worksheet '{summary_tab_name}'.")

except gspread.exceptions.WorksheetNotFound:

print(f"Worksheet '{summary_tab_name}' not found. Exiting...")

return

# Get all data from the Summary tab

data = worksheet.get_all_values()

headers = data[0]

rows = data[1:] # Skip headers

# Find the index of the "Issue Name" column

try:

issue_name_index = headers.index("Issue Name")

except ValueError:

print("'Issue Name' column not found in Summary tab.")

return

# Fetch the gid for each tab in the sheet

tabs_with_gid = fetch_tab_gid(sheet)

print(f"Tabs and their gids: {tabs_with_gid}")

# Add hyperlinks to each Issue Name

for i, row in enumerate(rows, start=2): # Start from row 2 (to skip headers)

issue_name = row[issue_name_index]

if issue_name.strip() and issue_name in tabs_with_gid: # Ensure it's not empty and tab exists

# Generate the hyperlink pointing to the gid of the tab

gid = tabs_with_gid[issue_name]

formula = f'=HYPERLINK("#gid={gid}", "{issue_name}")'

worksheet.update_cell(i, issue_name_index + 1, formula)

print(f"Added hyperlink for '{issue_name}' pointing to gid '{gid}'.")

else:

print(f"Skipping '{issue_name}' - Tab not found.")

# Add delay to avoid hitting API rate limits

time.sleep(1)

print("Hyperlinks added to the Summary tab.")

if __name__ == "__main__":

# Name of the Google Sheet

google_sheet_name = "NAME OF YOUR SHEET"

# Connect to the Google Sheet

sheet = connect_to_google_sheets(google_sheet_name)

# Add hyperlinks to the Summary tab

if sheet:

add_hyperlinks_to_summary(sheet)

This script adds clickable links in the Summary tab, directing users to the corresponding tabs for each issue.

Benefits of This Automation

- Saves Time:

- Automates tedious tasks like creating tabs and linking them.

- Processes hundreds of rows in seconds.

- Improves Accuracy:

- Reduces human errors when manually copying and organising data.

- Streamlines Navigation:

- Hyperlinks in the Summary tab to help navigation.

- Customisable:

- Adapt the scripts to your specific needs, such as custom headers or formatting.

How to Use the Scripts

- Create a credentials.json file for Google Sheets.

- Set Up the Environment:

- Install Python and the required libraries (

pandas,gspread,fuzzywuzzy, etc.). - Download your Screaming Frog Issues Overview Report and issue-specific CSV files.

- Install Python and the required libraries (

- Run the Scripts:

- Use Script 1 to process the data and organise it into tabs.

- Use Script 2 to add hyperlinks in the Summary tab.

- Verify the Output:

- Open your Google Sheet and confirm that:

- The Summary tab is complete.

- Each issue has its own tab with relevant data.

- Hyperlinks in the Summary tab point to the correct tabs.

- Open your Google Sheet and confirm that:

This script doesn’t only simplify the management of Screaming Frog data but it also enhances the efficiency of your SEO workflows. By leveraging Python and Google Sheets, the old-school UI in Screaming Frog isn’t a thing anymore, and their issue reports can be exported into a well-structured, easy-to-navigate resource that saves time and let’s you get on with the analysis and audit.