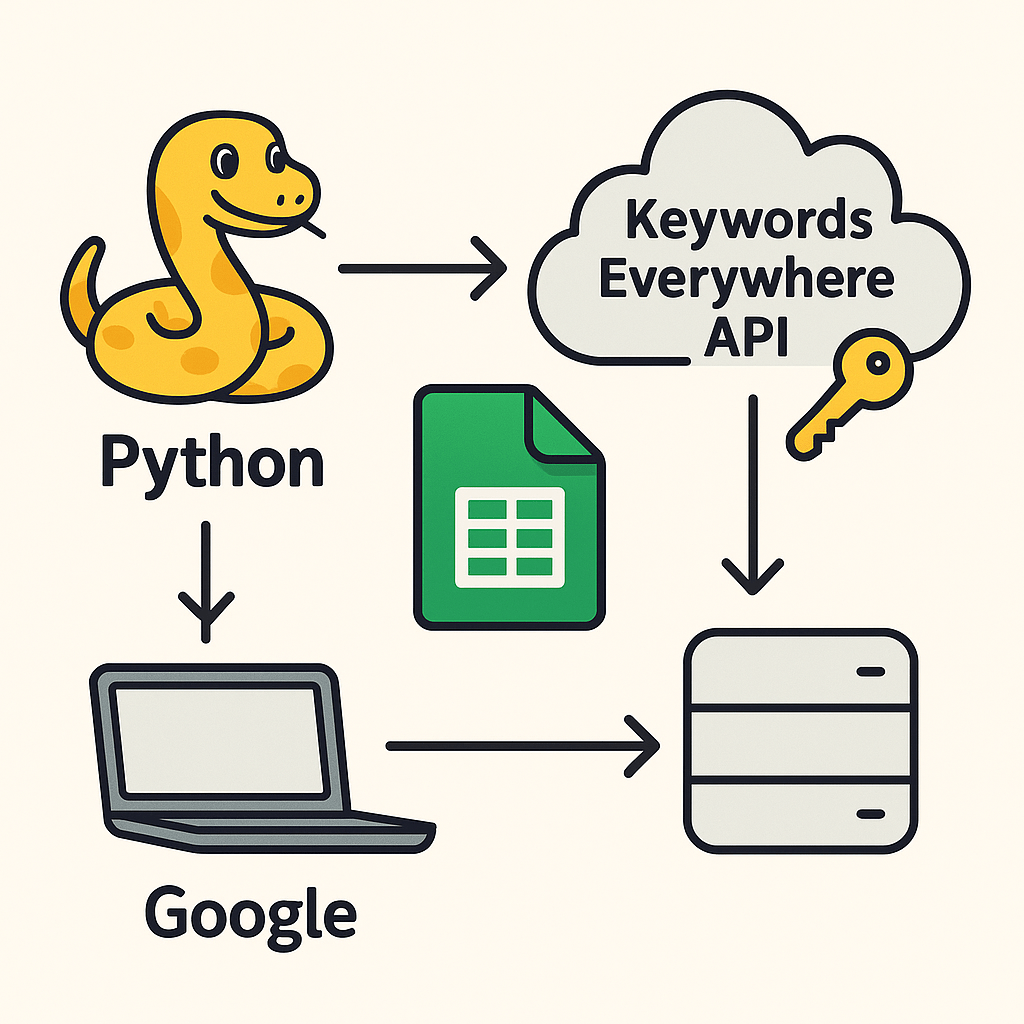

In any SEO and website migration project, accurately mapping old URLs to new ones is critical for maintaining search engine rankings and minimising disruptions. Google’s own guidance on site moves with URL changes stresses the importance of carefully planned redirects when URLs change.

The following Python scripts show how to streamline this process by comparing page titles or on-page content for similarity and generating a URL mapping file that can feed into a 301 redirect plan. They are designed as practical helpers – not full replacements for human review – so that SEO specialists and developers can focus their time on edge cases and strategy rather than manual copying and pasting.

Table of contents

- Script overview: page title matching for URL mapping

- Extending the script for content matching

- Limitations, performance and best practice

- Frequently asked questions

- External resources and further reading

Script overview: page title matching for URL mapping

This Python script is designed to match old URLs to new URLs based on page titles using fuzzy matching techniques. By automating this part of a migration, it saves time and reduces the risk of manual errors when you are mapping hundreds of similar-looking URLs.

Key features

- Fetch page titles: Uses `requests` and `BeautifulSoup` to extract page titles from provided URLs. For more detail, see the Requests quickstart and the Beautiful Soup documentation.

- Fuzzy matching: Leverages the RapidFuzz library’s `fuzz.ratio` to calculate similarity scores between old and new page titles. See the RapidFuzz fuzz.ratio docs for details.

- CSV input/output: Reads old and new URLs from a CSV file and generates a mapped output file with match scores using `pandas.read_csv` and `DataFrame.to_csv`. You can find more on this in the pandas read_csv documentation.

- Custom threshold: Allows users to set a similarity threshold (0–100) for better control over matches, so only strong title matches are used.

Code implementation

Below is the script for page title-based URL mapping:

    import requests
    from bs4 import BeautifulSoup
    import pandas as pd
    from rapidfuzz import fuzz


    def fetch_page_title(url):
        """
        Fetches the page title for a given URL.

        Uses the Requests library to retrieve the HTML and BeautifulSoup to parse
        the <title> element. For production use you may want to:
        - Add a custom User-Agent header
        - Handle retries / backoff for transient errors
        - Respect robots.txt and crawl-delay settings
        """
        try:
            response = requests.get(url, timeout=10)
            response.raise_for_status()
            soup = BeautifulSoup(response.text, "html.parser")
            # soup.title.string is None for an empty or nested <title>, so guard it
            if soup.title and soup.title.string:
                return soup.title.string.strip()
            return None
        except Exception as e:
            print(f"Error fetching title for {url}: {e}")
            return None


    def map_urls_by_titles(old_urls, new_urls, threshold=80):
        """
        Maps old URLs to new URLs based on their page titles using fuzzy matching.

        Parameters:
            old_urls (list): List of old URLs to map from.
            new_urls (list): List of new URLs to map to.
            threshold (int): Minimum similarity score to consider a match (0-100).

        Returns:
            DataFrame: A mapping of old URLs to new URLs based on page titles
            and match scores.

        Notes:
            - This script works well for small to medium sets of URLs
              (hundreds to a few thousand).
            - For very large migrations, consider batching, caching, or more
              advanced RapidFuzz APIs to improve performance.
        """
        # Fetch every title once, keyed by URL
        old_titles = {url: fetch_page_title(url) for url in old_urls}
        new_titles = {url: fetch_page_title(url) for url in new_urls}

        mappings = []

        # Create mapping by fuzzy matching titles
        for old_url, old_title in old_titles.items():
            best_match = None
            highest_score = 0

            for new_url, new_title in new_titles.items():
                if old_title and new_title:
                    # Calculate similarity score (0-100)
                    score = fuzz.ratio(old_title, new_title)
                    if score > highest_score:
                        highest_score = score
                        best_match = new_url

            # Add match details to the mapping
            mappings.append(
                {
                    "Old URL": old_url,
                    "Old Title": old_title,
                    "New URL": best_match if highest_score >= threshold else None,
                    "New Title": new_titles.get(best_match) if best_match else None,
                    "Match Score": highest_score,
                }
            )

        return pd.DataFrame(mappings)


    def read_urls_from_csv(file_path):
        """
        Reads old and new URLs from a CSV file.

        The CSV should have two columns: 'Old URL' and 'New URL'.

        Example format:
            Old URL,New URL
            https://oldsite.com/page1,https://newsite.com/page-a
            https://oldsite.com/page2,https://newsite.com/page-b
        """
        try:
            data = pd.read_csv(file_path)
            old_urls = data["Old URL"].dropna().tolist()
            new_urls = data["New URL"].dropna().tolist()
            return old_urls, new_urls
        except Exception as e:
            print(f"Error reading URLs from CSV: {e}")
            return [], []


    if __name__ == "__main__":
        # Input and output file paths
        input_csv = "urls.csv"  # Replace with your CSV file path
        output_csv = "url_mapping.csv"

        # Read URLs from the CSV file
        old_urls, new_urls = read_urls_from_csv(input_csv)

        if not old_urls or not new_urls:
            print("No URLs found in the input file. Please check the CSV format.")
        else:
            # Generate the URL mapping
            url_mapping = map_urls_by_titles(old_urls, new_urls, threshold=80)

            # Save the mapping to a CSV file
            url_mapping.to_csv(output_csv, index=False)
            print(f"URL mapping saved to {output_csv}")

Steps to use the title-matching script

- Install required libraries

  Install the Python libraries if they are not already available in your environment: `pip install requests beautifulsoup4 pandas rapidfuzz`

- Prepare the input CSV

  Create a CSV file (for example `urls.csv`) with two columns: `Old URL` and `New URL`. The script reads each column as an independent list and compares every old URL against every new URL, so the two URLs on a row do not need to correspond:

      Old URL,New URL
      https://oldsite.com/page1,https://newsite.com/page-a
      https://oldsite.com/page2,https://newsite.com/page-b
      https://oldsite.com/page3,https://newsite.com/page-c

  The script will use the titles of these URLs to suggest the best match for each old URL.

- Run the script

  Save the script to a file, for example `seo_migration_titles.py`, in the same directory as your CSV file, and run: `python seo_migration_titles.py`

- Review the output

  The script generates a file such as `url_mapping.csv` containing:

  - Old URL
  - Old Title
  - New URL (if a match passes the threshold)
  - New Title
  - Match Score (0–100)

Why might some URLs be missing from the output?

- Threshold too high: If the best similarity score does not meet the threshold (default is 80), `New URL` will be `None` in the DataFrame and blank in the CSV. Lower the threshold slightly (for example to 70–75) if you want to see more candidate matches, then manually review.

- Titles not found: If the script fails to fetch page titles for the URLs (due to timeouts, blocked requests, incorrect URLs or empty titles), matching cannot proceed. Check those URLs manually or adjust your timeout / error handling.
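To act on the two causes above, it can help to sort the output rows into buckets before reviewing them. The following is a minimal sketch that assumes the column names written by the title-matching script; the function name `triage_mapping` and the `margin` parameter are hypothetical and not part of the original script.

```python
import csv
import io


def triage_mapping(rows, threshold=80, margin=10):
    """Sort mapping rows into buckets of old URLs for manual review.

    rows: iterable of dicts using the columns written by the title script.
    Buckets:
      - "accepted":  a new URL was assigned
      - "no_title":  the old page title could not be fetched
      - "near_miss": best score is within `margin` points below the threshold
      - "unmatched": everything else
    """
    buckets = {"accepted": [], "no_title": [], "near_miss": [], "unmatched": []}
    for row in rows:
        score = float(row.get("Match Score") or 0)
        if row.get("New URL"):
            buckets["accepted"].append(row["Old URL"])
        elif not row.get("Old Title"):
            buckets["no_title"].append(row["Old URL"])
        elif score >= threshold - margin:
            buckets["near_miss"].append(row["Old URL"])
        else:
            buckets["unmatched"].append(row["Old URL"])
    return buckets


# Illustrative data only; in practice, open url_mapping.csv instead.
sample = io.StringIO(
    "Old URL,Old Title,New URL,New Title,Match Score\n"
    "https://oldsite.com/page1,About us,https://newsite.com/page-a,About us,100.0\n"
    "https://oldsite.com/page2,Our services,,Services,75.0\n"
    "https://oldsite.com/page3,,,,0\n"
)
buckets = triage_mapping(csv.DictReader(sample))
for name, urls in buckets.items():
    print(name, urls)
```

The `near_miss` bucket is the natural place to start when deciding whether to lower the threshold, while `no_title` points at fetch problems rather than matching problems.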

Extending the script for content matching

To go beyond page titles, the script can also compare other on-page elements such as meta descriptions, H1s, body text, and image alt attributes. This can be useful when titles are generic or have been rewritten during a redesign.

The following script demonstrates a more in-depth content comparison. Note that this version calculates a composite similarity score by summing the similarity of several components; it is not restricted to a 0–100 range. That means the threshold is a minimum total score rather than a simple percentage.

    import requests
    from bs4 import BeautifulSoup
    import pandas as pd
    from rapidfuzz import fuzz


    def fetch_page_content(url):
        """
        Fetches on-page content for a given URL, including title, meta description,
        H1, body text, and image alt attributes.
        """
        try:
            response = requests.get(url, timeout=10)
            response.raise_for_status()
            soup = BeautifulSoup(response.text, "html.parser")

            # Extract relevant content
            # soup.title.string is None for an empty or nested <title>, so guard it
            title = (
                soup.title.string.strip() if soup.title and soup.title.string else None
            )

            # Use .get() so a description tag without a content attribute
            # does not discard the whole page
            meta_tag = soup.find("meta", attrs={"name": "description"})
            meta_content = meta_tag.get("content") if meta_tag else None
            meta_description = meta_content.strip() if meta_content else None

            h1 = soup.find("h1")
            h1 = h1.text.strip() if h1 else None

            body_text = " ".join([p.text.strip() for p in soup.find_all("p")])

            # Skip empty alt text (decorative images); it carries no matching signal
            images = [
                img["alt"].strip()
                for img in soup.find_all("img", alt=True)
                if img["alt"].strip()
            ]

            return {
                "title": title,
                "meta_description": meta_description,
                "h1": h1,
                "body_text": body_text,
                "images": images,
            }
        except Exception as e:
            print(f"Error fetching content for {url}: {e}")
            return None


    def calculate_similarity(old_content, new_content):
        """
        Calculates a composite similarity score between two sets of page content.

        The score is a sum of:
        - Title similarity (0-100, if present)
        - Meta description similarity (0-100, if present)
        - H1 similarity (0-100, if present)
        - Body text similarity (0-100, if present)
        - Image alt text similarity (sum of best matches per image)

        As a result, the total score can exceed 100. In practice, strong matches
        often end up in the low hundreds, depending on how many components are
        present and how similar they are.
        """
        total_score = 0
        components = ["title", "meta_description", "h1", "body_text"]

        # Compare textual components
        for component in components:
            old = old_content.get(component, "")
            new = new_content.get(component, "")
            if old and new:
                total_score += fuzz.ratio(old, new)

        # Compare images (using alt text similarity)
        old_images = old_content.get("images", [])
        new_images = new_content.get("images", [])
        image_score = 0

        if old_images and new_images:
            for old_img in old_images:
                # Best-matching new alt text for this old image
                best_img_score = max(
                    fuzz.ratio(old_img, new_img) for new_img in new_images
                )
                image_score += best_img_score

        total_score += image_score
        return total_score


    def map_urls_by_content(old_urls, new_urls, threshold=200):
        """
        Maps old URLs to new URLs based on the closest match of on-page content.

        Parameters:
            old_urls (list): List of old URLs to map from.
            new_urls (list): List of new URLs to map to.
            threshold (int): Minimum composite similarity score to consider a match.

        Returns:
            DataFrame: A mapping of old URLs to new URLs based on content
            similarity scores.

        Notes:
            - Because the similarity score is a sum of multiple components,
              typical "good" matches may land somewhere between 200-400,
              depending on how much content is on the page.
            - Start with a threshold around 200-250, inspect the results,
              then adjust up or down based on your site.
        """
        # Fetch every page once, keyed by URL
        old_contents = {url: fetch_page_content(url) for url in old_urls}
        new_contents = {url: fetch_page_content(url) for url in new_urls}

        mappings = []

        for old_url, old_content in old_contents.items():
            best_match = None
            highest_score = 0

            for new_url, new_content in new_contents.items():
                if old_content and new_content:
                    score = calculate_similarity(old_content, new_content)
                    if score > highest_score:
                        highest_score = score
                        best_match = new_url

            # Add match details to the mapping
            mappings.append(
                {
                    "Old URL": old_url,
                    "New URL": best_match if highest_score >= threshold else None,
                    "Similarity Score": highest_score,
                }
            )

        return pd.DataFrame(mappings)


    def read_urls_from_csv(file_path):
        """
        Reads old and new URLs from a CSV file.

        The CSV should have two columns: 'Old URL' and 'New URL'.
        """
        try:
            data = pd.read_csv(file_path)
            old_urls = data["Old URL"].dropna().tolist()
            new_urls = data["New URL"].dropna().tolist()
            return old_urls, new_urls
        except Exception as e:
            print(f"Error reading URLs from CSV: {e}")
            return [], []


    if __name__ == "__main__":
        # Input and output file paths
        input_csv = "urls.csv"  # Replace with your CSV file path
        output_csv = "url_mapping_content.csv"

        # Read URLs from the CSV file
        old_urls, new_urls = read_urls_from_csv(input_csv)

        if not old_urls or not new_urls:
            print("No URLs found in the input file. Please check the CSV format.")
        else:
            # Generate the URL mapping
            url_mapping = map_urls_by_content(old_urls, new_urls, threshold=200)

            # Save the mapping to a CSV file
            url_mapping.to_csv(output_csv, index=False)
            print(f"URL mapping saved to {output_csv}")

By analysing these additional components, SEO specialists can ensure even closer matches between old and new URLs during migrations, particularly on content-heavy sites where titles alone are not enough.
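Because the composite score is an unbounded sum, pages with many images or components can outscore closer matches that have less content. One possible alternative is a weighted average over the components present on both pages, which stays within 0–100. The sketch below is an illustration, not part of the script above: `normalised_similarity`, `difflib_ratio` and the `WEIGHTS` values are hypothetical, and the standard library's `difflib.SequenceMatcher` is used as a stand-in scorer so the example runs without extra installs. Its results are comparable to, but not identical with, `fuzz.ratio`, which can be passed in as `scorer` instead.

```python
from difflib import SequenceMatcher


def difflib_ratio(a, b):
    """Stand-in scorer returning a 0-100 similarity for two strings."""
    return 100 * SequenceMatcher(None, a, b).ratio()


# Hypothetical weights; tune them for your own site.
WEIGHTS = {"title": 3, "h1": 2, "meta_description": 1, "body_text": 2}


def normalised_similarity(old_content, new_content, scorer=difflib_ratio):
    """Weighted average of component scores, always within 0-100.

    Components missing or empty on either page are skipped, so they
    neither raise nor lower the result.
    """
    weighted_sum = 0.0
    weight_total = 0.0
    for component, weight in WEIGHTS.items():
        old = old_content.get(component)
        new = new_content.get(component)
        if old and new:
            weighted_sum += weight * scorer(old, new)
            weight_total += weight
    return weighted_sum / weight_total if weight_total else 0.0


# Illustrative page content only.
old_page = {"title": "Blue widgets | Shop", "h1": "Blue widgets", "body_text": ""}
new_page = {"title": "Blue widgets | Store", "h1": "Blue widgets"}
score = normalised_similarity(old_page, new_page)
print(round(score, 1))
```

With a score bounded like this, the threshold can be read the same way as in the title-based script, at the cost of ignoring image alt text unless you add it as another weighted component.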

Limitations, performance and best practice

These scripts are intended as practical aids to accelerate URL mapping, not as fully autonomous redirect engines. To keep migrations safe and aligned with best practice, keep the following in mind:

- Always perform human QA: Treat the output CSV as a set of suggestions. Review high-value and borderline mappings manually before implementing redirects, and spot-check samples from lower-traffic areas.

- Scale and performance: Both approaches compare every old URL against every new URL, which means the matching step is roughly `O(n × m)` for n old and m new URLs. They are usually fine for hundreds or a few thousand URLs, but for very large sites you may need:

  - More efficient matching strategies (for example, RapidFuzz’s process helpers or blocking by directory / section).
  - Caching of fetched HTML to avoid repeated downloads.
  - Batching the migration by directory or site section.

- Respect crawling etiquette: When fetching pages at scale, make sure you:

  - Respect the site’s `robots.txt` and any crawl-delay guidance.
  - Use a reasonable timeout and rate limiting.
  - Send an appropriate User-Agent string and avoid overwhelming servers.

- Redirect implementation: Once mappings have been reviewed, implement 301 redirects in line with Google’s site move and redirect guidance:

  - Avoid redirect chains and loops where possible.
  - Keep old URLs redirecting for a suitable period after the migration.
  - Monitor Search Console for crawl errors and coverage changes.
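The crawling etiquette points can be sketched with the standard library's `urllib.robotparser`. This is a minimal illustration under stated assumptions: the `PoliteGate` class and the `USER_AGENT` string are hypothetical, and the robots.txt lines are passed in directly so the example does not touch the network.

```python
import time
from urllib.robotparser import RobotFileParser

USER_AGENT = "migration-mapper/0.1"  # hypothetical identifier


class PoliteGate:
    """Checks robots.txt rules and enforces a minimum delay between requests."""

    def __init__(self, robots_lines, min_delay=1.0,
                 sleep=time.sleep, clock=time.monotonic):
        self.parser = RobotFileParser()
        self.parser.parse(robots_lines)
        # Prefer the site's Crawl-delay when it is longer than our own minimum.
        declared = self.parser.crawl_delay(USER_AGENT)
        self.delay = max(min_delay, declared or 0)
        self._sleep = sleep
        self._clock = clock
        self._last = None

    def allowed(self, url):
        """Return True if robots.txt permits fetching this URL."""
        return self.parser.can_fetch(USER_AGENT, url)

    def wait(self):
        """Sleep just long enough to keep `delay` seconds between requests."""
        now = self._clock()
        if self._last is not None:
            remaining = self.delay - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
        self._last = self._clock()


# Illustrative robots.txt content only.
robots = ["User-agent: *", "Crawl-delay: 2", "Disallow: /private/"]
gate = PoliteGate(robots)
print(gate.allowed("https://oldsite.com/page1"))
print(gate.allowed("https://oldsite.com/private/report"))
print(gate.delay)
```

In the fetch functions, this would mean calling `gate.allowed(url)` and `gate.wait()` before each `requests.get(...)`, and passing `headers={"User-Agent": USER_AGENT}` so the site owner can identify the crawler.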

Frequently asked questions

Does this script replace a manual redirect audit?

No. The scripts are designed to reduce repetitive work and highlight likely matches, but a human still needs to review critical mappings, handle edge cases, and decide how to treat URLs that have no clear destination.

What similarity threshold should I use?

For the title-based script, a threshold of around 80 is a good starting point. If you find too few matches, experiment with 70–75 and review additional candidates manually.

For the content-based script, the score is a sum across multiple components, so typical strong matches may land in the 200–400 range. Start around 200–250, inspect the results, then adjust the threshold up or down based on how conservative you want to be.
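When adjusting a threshold, it can help to see how many old URLs would receive a match at several candidate values before committing to one. The helper below is a small sketch; `matches_per_threshold` is a hypothetical name, and the scores are made-up values standing in for the `Match Score` or `Similarity Score` column.

```python
def matches_per_threshold(scores, thresholds):
    """Count how many best-match scores would pass each candidate threshold."""
    return {t: sum(1 for s in scores if s >= t) for t in thresholds}


# Illustrative scores only; read them from your own output CSV.
scores = [95, 82, 78, 71, 40]
counts = matches_per_threshold(scores, thresholds=(70, 75, 80))
print(counts)
```

A sharp jump in the count between two neighbouring thresholds usually marks the band of rows worth reviewing by hand.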

Can I use these scripts for very large websites?

In principle, yes – but out of the box they are best suited to small-to-medium migrations. For large sites (tens of thousands of URLs or more), consider processing one section at a time, caching responses, and using more advanced RapidFuzz utilities to avoid doing every possible pairwise comparison.
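Processing one section at a time can be sketched as a blocking step that limits each old URL to candidates sharing its first path segment. The sketch assumes section names such as `/blog/` survive the migration, which will not hold on every site; `first_segment` and `candidates_by_section` are hypothetical helper names.

```python
from collections import defaultdict
from urllib.parse import urlparse


def first_segment(url):
    """Return the first path segment, e.g. 'blog' for https://x.com/blog/post."""
    parts = [p for p in urlparse(url).path.split("/") if p]
    return parts[0] if parts else ""


def candidates_by_section(old_urls, new_urls):
    """Map each old URL to the new URLs that share its first path segment."""
    new_by_section = defaultdict(list)
    for url in new_urls:
        new_by_section[first_segment(url)].append(url)
    return {old: new_by_section.get(first_segment(old), []) for old in old_urls}


# Illustrative URLs only.
old_urls = ["https://oldsite.com/blog/a", "https://oldsite.com/shop/b"]
new_urls = [
    "https://newsite.com/blog/x",
    "https://newsite.com/blog/y",
    "https://newsite.com/shop/z",
]
candidates = candidates_by_section(old_urls, new_urls)
for old, news in candidates.items():
    print(old, "->", news)
```

Each old URL is then scored only against its own candidate list, which cuts the number of comparisons. Old URLs with an empty candidate list need a fallback, such as comparing against all new URLs.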

Where should I implement the redirects?

That depends on your stack. Common options include web server configuration (for example, Apache or Nginx), a reverse proxy, a CMS-level redirect manager, or edge-worker logic on a CDN. Whatever the mechanism, make sure redirects are tested in a staging environment before going live.
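As one example for the web server option, a reviewed mapping file could be turned into exact-match Nginx `location` blocks. This is a sketch that assumes the `Old URL` and `New URL` columns from the scripts above; `nginx_redirects` is a hypothetical helper. Paths containing spaces or special characters would need quoting, and query strings are ignored.

```python
import csv
import io
from urllib.parse import urlparse


def nginx_redirects(rows):
    """Yield one exact-match nginx location block per reviewed mapping row.

    Rows without a 'New URL' are skipped so that unmatched pages are
    not redirected by accident.
    """
    for row in rows:
        old_url, new_url = row.get("Old URL"), row.get("New URL")
        if not old_url or not new_url:
            continue
        path = urlparse(old_url).path or "/"
        yield f"location = {path} {{ return 301 {new_url}; }}"


# Illustrative data only; in practice, open the reviewed mapping CSV.
reviewed = io.StringIO(
    "Old URL,New URL\n"
    "https://oldsite.com/page1,https://newsite.com/page-a\n"
    "https://oldsite.com/page2,\n"
)
rules = list(nginx_redirects(csv.DictReader(reviewed)))
for rule in rules:
    print(rule)
```

Treat the generated lines as a starting point to paste into a staging configuration and test, not as a finished redirect file.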