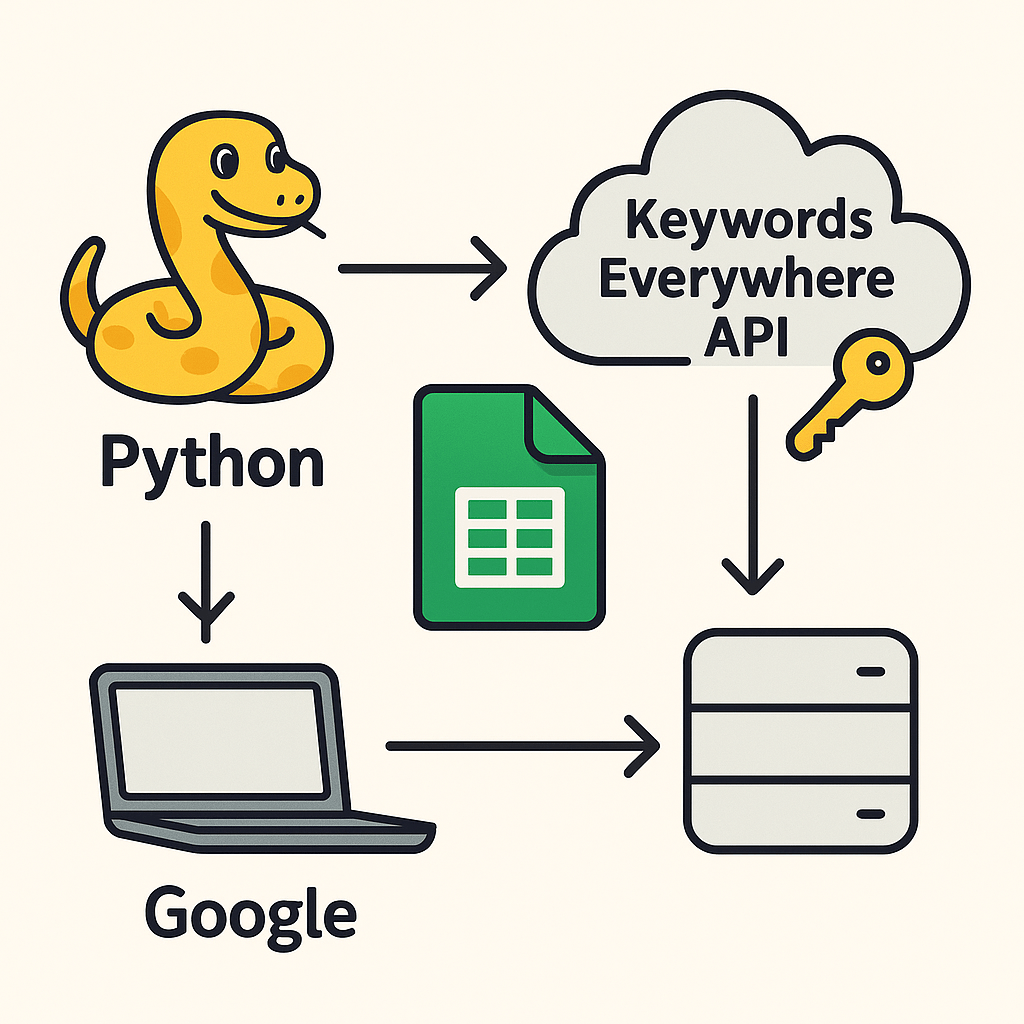

If you’re managing a large list of URLs and want to quickly check their canonical URLs but do not have access to a website crawler such as Screaming Frog and the IMPORTXML function is taking ages or failing then you may want to consider doing this with Python…

Table of Contents

This guide will walk through how to connect Python to a Google Sheet, scan through a column of URLs, extract each page’s canonical URL, and write the results back to the sheet in one click of a button.

What it Does

- Connect to your Google Sheet using the Google Sheets API

- Scan an entire column for valid URLs

- Fetch and parse each URL’s HTML to extract the

<link rel="canonical">tag - Write results into a new column next to your URLs

- Automatically handle errors, headers, and batching to avoid API limits

What You Need

- A Google Cloud project with Sheets API enabled

- A service account key (JSON file)

- Python 3 installed with the following libraries:

pip install gspread google-auth requests beautifulsoup4

Setting Up the Google Sheets API

- Go to the Google Cloud Console.

- Create a new project or select an existing one.

- Navigate to APIs & Services > Library, and enable Google Sheets API.

- Go to Credentials > Create credentials > Service account.

- Generate a key as a JSON file.

- Share your Google Sheet with the service account’s email (e.g.

my-bot@project-id.iam.gserviceaccount.com) with Editor access.

Python Script to Fetch Canonical URLs

Here’s the full working script:

import gspread

from google.oauth2.service_account import Credentials

import requests

from bs4 import BeautifulSoup

from datetime import datetime

# Google Sheets API Setup

SERVICE_ACCOUNT_FILE = "path/to/your-service-account.json" # <-- Change this

SCOPES = ["https://www.googleapis.com/auth/spreadsheets"]

creds = Credentials.from_service_account_file(SERVICE_ACCOUNT_FILE, scopes=SCOPES)

client = gspread.authorize(creds)

# Open your Google Sheet

SPREADSHEET_ID = "your_google_sheet_id" # <-- Change this

SHEET_NAME = "Sheet1" # <-- Or your actual sheet name

sheet = client.open_by_key(SPREADSHEET_ID).worksheet(SHEET_NAME)

# Read all values from Column A

raw_urls = sheet.col_values(1) # Entire Column A

urls = [(i + 1, url.strip()) for i, url in enumerate(raw_urls) if url.strip().startswith(("http://", "https://"))]

if not urls:

print("No valid URLs found in column A.")

exit()

# Function to get the canonical URL from a page

def get_canonical_url(url):

try:

headers = {"User-Agent": "Mozilla/5.0"}

response = requests.get(url, headers=headers, timeout=10)

soup = BeautifulSoup(response.text, "html.parser")

canonical_tag = soup.find("link", rel="canonical")

return canonical_tag["href"] if canonical_tag else url

except Exception as e:

print(f"Error fetching {url}: {e}")

return "Error"

# Fetch all canonical URLs

canonical_results = [(row, get_canonical_url(url)) for row, url in urls]

# Check if Column B is empty or in use

existing_column_b = sheet.col_values(2)

if len(existing_column_b) == 0:

column_b = "B"

else:

sheet.insert_cols([], col=2) # Insert new column at B

column_b = "B"

# Add header with timestamp

header_name = f"Canonical URLs {datetime.now().strftime('%Y-%m-%d %H:%M')}"

sheet.update(values=[[header_name]], range_name=f"{column_b}1")

# Prepare values to write

update_values = [[canonical_url] for _, canonical_url in canonical_results]

start_row = min(row for row, _ in canonical_results)

end_row = max(row for row, _ in canonical_results)

cell_range = f"{column_b}{start_row}:{column_b}{end_row}"

# Batch update (avoids quota errors)

sheet.batch_update([{"range": cell_range, "values": update_values}])

print(f"Canonical URLs added to Column {column_b}")

Built-In Safeguards

- Skips blank or invalid rows (e.g., text-only cells).

- Catches timeouts or HTTP errors and returns

"Error"in the sheet. - Prevents quota errors by using efficient batch updates.

- Adds a timestamped header for easy version tracking.

- Inserts a new column only if necessary.

Example Output

| A (Input URL) | B (Canonical URL) |

|---|---|

https://example.com/test | https://example.com/canonical-test |

https://other.com/about | https://other.com/about |

Use Cases

- SEO audits for verifying canonical tags for large websites on Google Sheets

- Duplicate content checks

- Content migration QA

- Batch URL validation before redirects

Advanced Script

If your sheet has 100 thousands of URLs, you may want to use this script instead as it incorporates:

- Retry logic for failed fetches

- Multi-threading or async requests for speed

- Caching to avoid hitting the same URLs repeatedly

Required Libraries

pip install aiohttp

The Script

import asyncio

import aiohttp

import gspread

from google.oauth2.service_account import Credentials

from bs4 import BeautifulSoup

from datetime import datetime

from collections import defaultdict

# === CONFIG ===

SERVICE_ACCOUNT_FILE = "path/to/your-service-account.json" # <-- Change this

SPREADSHEET_ID = "your_google_sheet_id" # <-- Change this

SHEET_NAME = "Sheet1"

MAX_CONCURRENT_REQUESTS = 10

RETRY_LIMIT = 3

# === Google Sheets Setup ===

SCOPES = ["https://www.googleapis.com/auth/spreadsheets"]

creds = Credentials.from_service_account_file(SERVICE_ACCOUNT_FILE, scopes=SCOPES)

client = gspread.authorize(creds)

sheet = client.open_by_key(SPREADSHEET_ID).worksheet(SHEET_NAME)

# === URL Fetching Logic ===

cache = {}

retry_counts = defaultdict(int)

async def fetch_canonical(session, url):

if url in cache:

return cache[url]

headers = {"User-Agent": "Mozilla/5.0"}

try:

async with session.get(url, headers=headers, timeout=10) as response:

if response.status != 200:

raise Exception(f"HTTP {response.status}")

html = await response.text()

soup = BeautifulSoup(html, "html.parser")

canonical_tag = soup.find("link", rel="canonical")

canonical = canonical_tag["href"] if canonical_tag else url

cache[url] = canonical

return canonical

except Exception as e:

if retry_counts[url] < RETRY_LIMIT:

retry_counts[url] += 1

return await fetch_canonical(session, url)

print(f"Failed to fetch {url} after {RETRY_LIMIT} attempts: {e}")

cache[url] = "Error"

return "Error"

async def process_urls(urls):

connector = aiohttp.TCPConnector(limit=MAX_CONCURRENT_REQUESTS)

async with aiohttp.ClientSession(connector=connector) as session:

tasks = [fetch_canonical(session, url) for url in urls]

return await asyncio.gather(*tasks)

# === Main Logic ===

# Read column A (Original URLs)

raw_urls = sheet.col_values(1)

rows_with_urls = [(i + 1, url.strip()) for i, url in enumerate(raw_urls) if url.strip().startswith(("http://", "https://"))]

if not rows_with_urls:

print("No valid URLs found in column A.")

exit()

row_numbers, urls = zip(*rows_with_urls)

# Process all canonical lookups concurrently

canonical_urls = asyncio.run(process_urls(urls))

# Insert new column if needed

existing_column_b = sheet.col_values(2)

if len(existing_column_b) > 0:

sheet.insert_cols([], col=2)

column_letter = "B"

# Add timestamped header

timestamp = datetime.now().strftime('%Y-%m-%d %H:%M')

header = f"Canonical URLs {timestamp}"

sheet.update(values=[[header]], range_name=f"{column_letter}1")

# Prepare values

output_rows = [[""] for _ in range(len(raw_urls))] # Ensure full sheet length

for row, canonical in zip(row_numbers, canonical_urls):

output_rows[row - 1] = [canonical]

# Define range and write with batch_update

start_row = 2

end_row = len(raw_urls)

sheet.batch_update([

{"range": f"{column_letter}{start_row}:{column_letter}{end_row}", "values": output_rows[start_row - 1:end_row]}

])

print(f"Canonical URLs written to Column {column_letter} with concurrency, retries, and caching.")